JavaRanch Journal - April 2004 Volume 3 Issue 3 (or something)

Service your application with Jini

Lasse Koskela

Accenture Technology Solutions

Copyright © 2004 Lasse Koskela

Abstract

One of the latest IT buzzwords has been Service-Oriented Architecture (SOA),
which essentially suggests that building applications against services
instead of components is a potential way to build more robust
and flexible systems. While SOA has recently been tightly associated with Web

Services, the concept of structuring systems as services distributed across the

network isn't anything new. Jini network technology has been around for

half a decade already and has proven itself as a robust technology for

implementing the low-level wiring of distributed Java applications. If you have

missed the Jini train so far, here's a little intro to get you up to speed with

the Jini architecture within minutes, even.

Jini what?

In short, Jini is a technology for distributed computing on the Java

platform. The word "Jini" is not an acronym although someone allegedly tried to

suggest "Jini Is Not Initials" after the fact :)

As is typical for the Java world, Sun Microsystems has provided a

specification and an API which vendors are free to implement to their best

capability. A significant part of such a specification is the set of concepts

embodied in the architecture and corresponding APIs. Let's take a look at that

first, before going into the details and, eventually, (drum roll, please) the

code.

Oh, and before we go any further, let me apologize beforehand if I seem too

enthusiastic about Jini. That's only because I am.

The Jini Architecture: Concepts

The holy trinity

Since Jini is a distributed architecture by nature, it's not too difficult to

guess that essential parts of it are some sort of servers and the corresponding

clients. In Jini, we call these "servers" services and, well, the

clients are just that. Clients.

So, what is the third party in our trinity, then? Like in many other

service-oriented architectures, the clients need some way of locating the

services they need. Jini has the concept of lookup services. These lookup

services are similar to CORBA's Naming Service, Java's JNDI trees, and UDDI

registries. In fact, you could consider Jini's lookup services to be sort of

"live" versions of the above-mentioned alternatives.

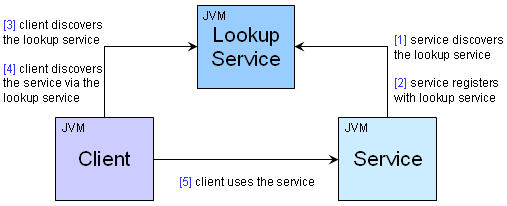

Figure 1 illustrates the dependencies and communication between the

client, the service, and the lookup service.

Discovery

Jini APIs provide two ways of discovering services: unicast and

multicast. In the unicast scenario, the client knows where to search for

services -- it knows where the lookup service can be contacted. In the multicast

scenario, the client is not aware of a lookup service and relies on IP

multicasting a query to which available lookup services respond. Once the client

has discovered the lookup services, it then proceeds to discover the actual

services registered with these lookup services. Obviously, before the clients

can discover services via a lookup service, the service must register itself

with the lookup service using the Jini API.

Ok. The service has registered itself with a lookup service. Now how will the

client know that this particular service is what she wants to use? The answer

lies in the way services register themselves, providing three possible

"identifiers" for the clients' disposal, namely a service ID, the service item,

and a number of entries:

- Entries are basically properties describing the service or its particular

implementation. For example, a CarWashService might advertise itself with

entries such as "We're open 24/7" and "We're located in Boulder, Colorado".

The Jini API includes a number of built-in entry types but you are free to

write your own, custom entry types implementing a marker interface.

- The registrar can also pass a service ID to the lookup service or,
alternatively, be assigned one. This service ID can be used to identify a

specific service the client wants to use -- if the service ID is "known" by

the client.

- The most important identifier, however, is the service item. When a client

is looking for a service, she's actually looking for an object that implements

an interface. In Jini, this interface can be a Java interface or a Java class

(although the former is the more common and definitely the suggested one).

When doing a service lookup, the client indicates the interface or class the

service item must implement or extend.
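To make the three identifiers a bit more concrete, here is a minimal toy model of how a lookup might filter registrations by service ID, type, and entries. This is not the Jini API: ToyLookup, Item, and CarWash are hypothetical names, entries are simplified to plain strings, and the sketch uses newer Java syntax than the rest of this article.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Toy model of a lookup service; NOT the Jini API. All names are hypothetical. */
class ToyLookup {
    /** A registration: a service ID, the service object, and descriptive entries. */
    static final class Item {
        final String serviceId;
        final Object service;
        final Set<String> entries;

        Item(String serviceId, Object service, String... entries) {
            this.serviceId = serviceId;
            this.service = service;
            this.entries = new HashSet<>(Arrays.asList(entries));
        }
    }

    private final List<Item> items = new ArrayList<>();

    void register(Item item) {
        items.add(item);
    }

    /** A null ID or type acts as a wildcard; every requested entry must be present. */
    List<Item> lookup(String serviceId, Class<?> type, String... requiredEntries) {
        List<Item> matches = new ArrayList<>();
        for (Item item : items) {
            boolean idOk = serviceId == null || serviceId.equals(item.serviceId);
            boolean typeOk = type == null || type.isInstance(item.service);
            boolean entriesOk = item.entries.containsAll(Arrays.asList(requiredEntries));
            if (idOk && typeOk && entriesOk) {
                matches.add(item);
            }
        }
        return matches;
    }
}

/** Hypothetical service interface borrowed from the car wash example. */
interface CarWash {
    String wash(String car);
}

class ToyLookupDemo {
    static ToyLookup sample() {
        ToyLookup lookup = new ToyLookup();
        CarWash wash = car -> car + " is clean";
        lookup.register(new ToyLookup.Item("id-1", wash, "open 24/7", "Boulder, Colorado"));
        lookup.register(new ToyLookup.Item("id-2", wash, "Boulder, Colorado"));
        lookup.register(new ToyLookup.Item("id-3", "not a car wash"));
        return lookup;
    }

    public static void main(String[] args) {
        ToyLookup lookup = sample();
        // by type only, by type plus entry, and by a known service ID
        System.out.println(lookup.lookup(null, CarWash.class).size());
        System.out.println(lookup.lookup(null, CarWash.class, "open 24/7").size());
        System.out.println(lookup.lookup("id-3", null).size());
    }
}
```

The point of the sketch is only that each identifier narrows the result set independently and that leaving one out means "don't care".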

The multicast discovery feature of Jini's discovery mechanism allows

implementing a completely dynamic, self-healing network of systems and

applications. The fact that you can get "connected" to a bunch of lookup

services (which are just like any other Jini service, by the way) by simply

shouting out loud, "any lookup services out there?", is what makes Jini's

discovery mechanism such a powerful tool for building such systems.

I'll get back to the Jini lookup service later in this article to discuss

these unique features in more detail. For now, let's move on.

Leases

What happens to a service registration once it has been created in the lookup

service? The answer depends on two things: how the lookup service manages

registered services and whether someone is looking after the service's

registration.

When a service is registered with a lookup service, the lookup service

assigns a lease, a ticket which says "I'll keep this service registration

in my records for X minutes. After that, it's gone." The Jini API lets the

service request a preferred lease length but the lookup service has the final
say. Generally, lookup services don't accept "eternal" registrations but
enforce some kind of a limit, which can be anything from 60 minutes to 4.32
days.

Right. Leases. But what does it actually mean that the lease expires? For

clients who have already discovered their services, the expiring lease does

nothing -- the client uses the service directly without any participation from

the lookup service it located the service through. However, since the lookup

service drops the registration when a lease expires, a new client will not

discover the service until it re-registers itself with the lookup service.
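The bookkeeping behind leases can be sketched in a few lines. The following is a toy model under stated assumptions, not how any real lookup service is implemented: ToyLeaseTable is a hypothetical class, the service ID is a plain string, and the current time is passed in explicitly so that the behavior is easy to follow.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy lease bookkeeping; NOT the Jini API. Time is passed in to keep things deterministic. */
class ToyLeaseTable {
    private final long maxLeaseMillis; // the lookup service's own upper limit
    private final Map<String, Long> expirations = new HashMap<>();

    ToyLeaseTable(long maxLeaseMillis) {
        this.maxLeaseMillis = maxLeaseMillis;
    }

    /** Registers or renews; the granted expiration may be sooner than what was requested. */
    long register(String serviceId, long requestedMillis, long now) {
        long granted = Math.min(requestedMillis, maxLeaseMillis);
        long expiration = now + granted;
        expirations.put(serviceId, expiration); // same ID replaces the old registration
        return expiration;
    }

    /** Drops expired registrations, then reports whether the service is still listed. */
    boolean isRegistered(String serviceId, long now) {
        expirations.values().removeIf(expiration -> expiration <= now);
        return expirations.containsKey(serviceId);
    }
}
```

A service asking for an "eternal" lease from a table created with a 60-second limit gets 60 seconds, disappears from the listing after that, and shows up again once it re-registers with the same ID.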

Ahh, I see. But how does the service re-register itself, then? When a service

registers with a lookup service for the first time, it often lets the lookup

service assign a service ID to the service in question. If the service is down

during maintenance, for example, and wants to re-register with the lookup

service, it simply provides the same service ID that it had previously and the

lookup service will "replace" any existing registration for that service ID. If

there are multiple lookup services to register with, the service should use the

same service ID for all of them. That way, a client discovering services through

the multiple lookup services can easily recognize the available implementations

for a certain service, identified by its "global" service ID.

In fact, leases are an essential part of Jini's self-healing property!

Consider a scenario where a registered service suddenly becomes unavailable due

to a network problem or a system crash. With short leases, the lookup service

will soon notice that the service isn't available anymore and doesn't refer any

clients to it. Similarly, when the service becomes alive again, it re-registers

with the lookup service and the lookup service starts referring clients to it.

Better yet, the clients who need to use a currently unavailable service can

register a distributed listener with the lookup service, which gets

called when the required service becomes available. Now that's what I call

self-healing! Think, "I don't have any color printers for you right now, but

I'll let you know when I do!"

The Jini Architecture: Technologies

The wire tap: what's going on in there?

I mentioned earlier that Jini is a distributed computing technology for

Java applications. While that is certainly the sweet spot for this

exciting technology, Jini can be used for exposing native legacy applications to

the new breed of Java-powered enterprise applications.

Having said that, Jini does rely on Java's RMI (Remote Method Invocation) for

implementing essential parts of the architecture. Service registration involves

downloading serialized Java classes to/from the lookup service as does the

service discovery process performed by the clients.

Well, if the service must talk Java and the client must talk Java, how can

Jini help "expose native legacy applications"? The proxy is the key. When a

service is registered with a lookup service, the registrar stores a service

item, a serialized Java object, into the lookup service's database. This

service item can be any type of Java object as long as it's Serializable. When a

client looks up the service, the lookup service passes a copy of the service

item object to the client. The client only knows that she received an

implementation of whatever interface the service promised to implement. The

client has no idea how the object implements the service.

This abstraction leaves room for a variety of approaches to implementing the

service: the service item might have the ability to provide the service all by

himself, or the service item might just act as a thin proxy passing method calls

over the network to the actual service. Or something in between. It is the thin

proxy approach that makes Jini such a nice way of exposing legacy applications

to other systems within an enterprise. If the service item today communicates

with the legacy applications over raw sockets and tomorrow the legacy

application has been replaced with a web services-enabled version, the service

is simply re-registered with an updated version of the service item and the

clients won't know the difference!
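Here is a small sketch of why the client doesn't notice the swap. Greeter, Transport, and GreeterProxy are hypothetical names made up for this illustration, and the two "transports" are faked with lambdas instead of real sockets or web service calls. A real service item would also have to be Serializable, which this sketch leaves out.

```java
/** The contract the client codes against (hypothetical example interface). */
interface Greeter {
    String greet(String name);
}

/** Stand-in for the wire protocol; real code might wrap a socket or an HTTP client. */
interface Transport {
    String send(String request);
}

/** Thin proxy: no business logic, it only forwards the call over the given transport. */
class GreeterProxy implements Greeter {
    private final Transport transport;

    GreeterProxy(Transport transport) {
        this.transport = transport;
    }

    public String greet(String name) {
        return transport.send(name);
    }
}

class ProxyDemo {
    /** Client code: it sees nothing but the Greeter interface. */
    static String client(Greeter service) {
        return service.greet("Jini");
    }

    public static void main(String[] args) {
        // today's proxy and tomorrow's proxy differ only in the transport they carry
        Transport rawSocket = request -> "[raw socket] hello " + request;
        Transport webService = request -> "[web service] hello " + request;
        System.out.println(client(new GreeterProxy(rawSocket)));
        System.out.println(client(new GreeterProxy(webService)));
    }
}
```

The client() method is identical in both calls; only the object handed to it changed.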

Security

Jini involves downloading code from "the outside world" and executing that

code. This is an obvious sign for the need for security. So, how do we prevent

our client from executing malicious code?

The security in Jini is based on the J2SE security manager, namely,

java.rmi.RMISecurityManager. The client application should have as
restrictive a policy file as possible. In practice, most developers tend to

start development with an all-forgiving "policy.all" file, which permits

practically anything, and tighten up the security in the end. This is the

approach we'll use in our example later in this article -- although I'm going to

skip the latter part, tightening the security :)

While the security features in Jini 1.x were limited to what can be done with

the policy file ("only allow downloading code which is coming from server X and

signed by Y"), the Jini 2.0 specification brings along a number of additional

tools for making your Jini network secure, including:

- Dynamic permissions (grant permissions only after determining whether the

given proxy can be trusted)

- Security constraints (both parties state what kind of constraints they

require and the protocol used is negotiated based on these requirements)

- HTTPMD (an HTTP URL scheme in which a message digest is attached to the URL
to provide data integrity, achieving integrity guarantees similar to
those of HTTPS but in a more lightweight manner)

- Proxy trust (client asks the service whether she trusts the proxy

the client received, supposedly, from the lookup service)

Example: Fibonacci sequence as a service

As an introductory example, I decided to use the mathematical problem of

calculating the value of a Fibonacci sequence. The Jini client wants his

sequence calculated and the Jini services provide the ability to do so. To make

the example a bit more illustrative, I've actually written a number of different

implementations for our Fibonacci interface just like there could be a

number of different implementations of your real world service.

For those who don't quite remember what the Fibonacci sequence is all about,

here's a brief description:

F(1) = 1, F(2) = 1, F(n) = F(n - 1) + F(n - 2)

In other words, except for F(1) and F(2), which are special cases, the

nth number in the sequence is the sum of the two previous numbers.

The sequence therefore begins 1, 1, 2, 3, 5, 8, 13, 21, ...
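As a quick sanity check of the definition, here is a minimal iterative sketch that is independent of the service implementations shown later. FibonacciSequence is a hypothetical helper name used only for this illustration.

```java
/** Straightforward iterative evaluation of the definition; hypothetical helper class. */
class FibonacciSequence {
    /** Returns F(n) for n >= 1 by repeatedly summing the two previous numbers. */
    static long f(int n) {
        if (n < 1) {
            throw new IllegalArgumentException("n must be >= 1");
        }
        long previous = 1; // F(1)
        long current = 1;  // F(2)
        for (int i = 3; i <= n; i++) {
            long next = previous + current;
            previous = current;
            current = next;
        }
        return current;
    }

    public static void main(String[] args) {
        // prints the first eight numbers: 1 1 2 3 5 8 13 21
        for (int n = 1; n <= 8; n++) {
            System.out.print(f(n) + " ");
        }
        System.out.println();
    }
}
```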

System requirements for our example

Obviously, developing against the Jini APIs requires having these APIs

available for the Java compiler. Furthermore, in order to make a Jini client

work with a Jini service, we need the third wheel -- the lookup service. Still,

in order to download code we need to serve it up from somewhere, which is why we

need an HTTP server of some sort.

Even though Jini 2.0 has been out there for quite some time already, I chose

to use the older Jini 1.2.1 release for the example. The reason being that the

1.2.1 distribution is much easier for a beginner to grasp than the latest

release, which is a lot bigger (for a reason). The code in this example should

be compatible with Jini 2.0, however, with very minor changes if any.

I should probably also mention that while all example scripts/code use

"localhost", this, in fact, is something you should never do in real development

-- in a distributed environment "localhost" means a different thing to each

participating computer...
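One simple way to avoid hardcoding "localhost" is to read the lookup service's address from configuration. The sketch below assumes two system property names, lookup.host and lookup.port, which I made up for this illustration; the default port 4160 is the one used in the example code later in this article.

```java
/** Builds a jini:// URL from system properties; the property names are hypothetical. */
class LookupUrl {
    static String fromSystemProperties() {
        // fall back to the values used in this article's examples
        String host = System.getProperty("lookup.host", "localhost");
        int port = Integer.getInteger("lookup.port", 4160);
        return "jini://" + host + ":" + port;
    }
}
```

Started with -Dlookup.host=lookup.example.org, the method would return "jini://lookup.example.org:4160", a string of the same form the example code passes to its LookupLocator.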

Installing the Jini Starter Kit

You can download the Jini Starter Kit from http://wwws.sun.com/software/communitysource/jini/download.html

and unzip the archive somewhere on your harddisk. I put mine in

"C:\Apps\jini-1.2.1_001" but the actual location doesn't matter.

Now that you have the starter kit in place, let's take a look at how we can

get the lookup service running and an HTTP server serving our code.

The lookup service reference implementation, part of the Jini Starter Kit, is

called "Reggie". Reggie is basically an executable JAR file which takes a few

simple command-line arguments to start up (see my batch file below).

startLookupService.bat:

    @echo off
    set REGGIE_JAR=lib\reggie.jar
    set POLICY=policy.all
    set CODEBASE=http://localhost:8080/reggie-dl.jar
    set LOGDIR=reggie_log
    set GROUPS=public
    java -Djava.security.policy=%POLICY% -jar %REGGIE_JAR% %CODEBASE% %POLICY% %LOGDIR% %GROUPS%

Looking complicated? It's not. Let's go through the parameters one by one and

see what they mean.

| Parameter | Meaning |
| --- | --- |
| REGGIE_JAR | This is the executable JAR file which launches the lookup service. |
| POLICY | This is the all-forgiving policy file used for development. Its contents are simply: grant { permission java.security.AllPermission "", ""; }; |
| CODEBASE | This is the RMI codebase from where clients can download the stubs for Reggie (yes, Reggie is accessed over RMI). |
| LOGDIR | This is the directory which Reggie will use as its database. It cannot be an existing directory (Reggie will complain about it and refuse to start if it finds the directory exists already). |
| GROUPS | The Reggie lookup service has a concept of groups. For now, let's just use the default group named "public". |

All set? Almost, but not quite. Since Reggie relies on an RMI server to run,

we need to start one. Here's a command for starting up the RMI daemon,

rmid, on the default port (1098).

startRmiServer.bat:

    @echo off
    rmid -J-Djava.security.policy=policy.all -J-Dsun.rmi.activation.execPolicy=none -log rmid_logs

Note: Reggie only needs to be registered with the RMID once! After

that, the RMID remembers Reggie and that it should activate it when needed --

even after restarting the RMID. This is also the reason why

"startLookupService.bat" returns after a few seconds -- it doesn't need to keep

running because the RMID will take care of invoking it when the time comes!

Finally, we need an HTTP server to download the code from (both for using

Reggie and for our services). Fortunately, the Jini Starter Kit includes a

simple HTTP server which serves files from a given directory and nicely prints a

log of each downloaded file.

startHttpServer.bat:

    @echo off
    java -jar lib\tools.jar -port 8080 -dir webroot -verbose

For now, copy the reggie-dl.jar from %JINI_HOME%\lib into the HTTP
server's content directory (the one passed with -dir; "webroot" in the script above) so that clients can download
the stubs for using the lookup service.

Now we should be all set. Try running the batch files

(startRmiServer.bat, startLookupService.bat, startHttpServer.bat) and see that

you don't get any pesky little error messages. If you do, double-check your

scripts, delete the log directories (reggie_log and rmid_logs), and try again. If
you still get errors, there are always the friendly folks at The

Big Moose Saloon who are more than willing to help you out if asked nicely

;)

If all went fine, you shouldn't need to touch these processes for the rest of

the article. If for some reason you do need to stop them, go ahead and start

them again when you want to continue (except for the lookup service which

doesn't need to be restarted).

Gentlemen, start your IDEs!

Now that we have the necessary infrastructure running, we can move on to the

fun stuff! We'll start by specifying the service we're going to use, the

Fibonacci sequence calculator service, write some implementations of that

service, and then proceed to writing the Jini-specific stuff -- the Jini client

and the Jini service.

The interface

The first thing to do is to specify the contract for the service, that is,

the Java interface the clients will expect to get an implementation for.

Fibonacci.java:

    package com.javaranch.jiniarticle.service.api;

    import java.rmi.RemoteException;

    public interface Fibonacci {
        long calculate(int n) throws RemoteException;
    }

Note that I've defined the calculate() method to throw a

java.rmi.RemoteException. Even if a given implementation doesn't connect
to remote systems during the method call, it's good practice to explicitly

tell the client that the service might be implemented using remote method

invocations, web services, and so on -- next week that may very well be the

case!

The implementations

Now that we have an interface to implement, let's see what kind of

implementations we can come up with.

First of all, I know two ways of performing the actual calculation: one in

which the sequence is calculated with brute force (summing up numbers one by one

with recursive method calls), and one in which the result is calculated using an

approximation formula (a bit of cheating but the result is very accurate until

somewhere around F(80) or so...). Let's see what those two implementations look

like.

The first implementation, FibonacciBasicImpl, is the brute force way.

It refuses to calculate sequences beyond F(50) because the duration
increases dramatically when n approaches such numbers.

FibonacciBasicImpl.java:

    package com.javaranch.jiniarticle.service.impl;

    import java.io.Serializable;
    import com.javaranch.jiniarticle.service.api.Fibonacci;

    public class FibonacciBasicImpl implements Serializable, Fibonacci {

        public long calculate(int n) {
            if (n < 1 || n > 50) {
                // out of range: signal "can't do that" with -1
                return -1;
            } else if (n <= 2) {
                return 1;
            } else {
                return calculate(n - 1) + calculate(n - 2);
            }
        }
    }

The second implementation, FibonacciFloatImpl, is the easy-way-out

method of using an approximation formula. This particular implementation

performs well as long as n is small enough for the result to fit in a

long.

FibonacciFloatImpl.java:

    package com.javaranch.jiniarticle.service.impl;

    import java.io.Serializable;
    import com.javaranch.jiniarticle.service.api.Fibonacci;

    public class FibonacciFloatImpl implements Fibonacci, Serializable {

        /** The square root of 5 is used a lot in this formula... */
        private static final double SQRT5 = Math.sqrt(5.0);

        public long calculate(int n) {
            if (n < 1) {
                return -1;
            } else if (n <= 2) {
                return 1;
            } else {
                double fpResult =
                    Math.pow((1 + SQRT5) / 2, (double) n) / SQRT5
                        - Math.pow((1 - SQRT5) / 2, (double) n) / SQRT5;
                return Math.round(fpResult);
            }
        }
    }
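If you are curious where the approximation starts to drift on your own JVM, a small comparison against an exact calculation will tell you. The sketch below uses the same formula as FibonacciFloatImpl; BinetCheck and its method names are hypothetical, and I'm deliberately not claiming which n is the first to differ.

```java
/** Compares the closed-form approximation with an exact calculation; hypothetical helper. */
class BinetCheck {
    private static final double SQRT5 = Math.sqrt(5.0);

    /** Closed-form approximation, the same formula FibonacciFloatImpl uses. */
    static long approximate(int n) {
        double result = Math.pow((1 + SQRT5) / 2, n) / SQRT5
                      - Math.pow((1 - SQRT5) / 2, n) / SQRT5;
        return Math.round(result);
    }

    /** Exact value by iteration; valid while the result fits in a long (n <= 92). */
    static long exact(int n) {
        long previous = 1;
        long current = 1;
        for (int i = 3; i <= n; i++) {
            long next = previous + current;
            previous = current;
            current = next;
        }
        return current;
    }

    /** Returns the first n in [1, 92] where the two disagree, or -1 if they never do. */
    static int firstMismatch() {
        for (int n = 1; n <= 92; n++) {
            if (approximate(n) != exact(n)) {
                return n;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println("First mismatch at n = " + firstMismatch());
    }
}
```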

While we're at it, why not add a slightly optimized version of our accurate

but dead-slow recursive implementation...

FibonacciMemorizingImpl.java:

    package com.javaranch.jiniarticle.service.impl;

    import java.io.Serializable;
    import java.util.HashMap;
    import java.util.Map;
    import com.javaranch.jiniarticle.service.api.Fibonacci;

    public class FibonacciMemorizingImpl implements Fibonacci, Serializable {

        // results calculated so far, keyed by n
        private static final Map preCalculated = new HashMap();

        static {
            preCalculated.put(new Integer(1), new Long(1));
            preCalculated.put(new Integer(2), new Long(1));
        }

        public long calculate(int n) {
            if (n < 1) {
                // without this guard the recursion below would never terminate
                return -1;
            }
            Long value = (Long) preCalculated.get(new Integer(n));
            if (value != null) {
                return value.longValue();
            } else {
                long v = calculate(n - 1) + calculate(n - 2);
                preCalculated.put(new Integer(n), new Long(v));
                return v;
            }
        }
    }
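One thing none of these implementations checks is overflow: F(92) is the last Fibonacci number that fits in a Java long, so anything beyond that silently wraps around. The following is a minimal sketch of how an implementation could detect it, using Math.addExact from newer Java versions (not available on the JDKs of this article's era). CheckedFibonacci is a hypothetical class, not one of the article's service implementations.

```java
/** Iterative calculation that fails loudly instead of overflowing; hypothetical helper. */
class CheckedFibonacci {
    /** Returns F(n), or throws ArithmeticException once the sum no longer fits in a long. */
    static long f(int n) {
        if (n < 1) {
            throw new IllegalArgumentException("n must be >= 1");
        }
        long previous = 1;
        long current = 1;
        for (int i = 3; i <= n; i++) {
            long next = Math.addExact(previous, current); // throws on overflow
            previous = current;
            current = next;
        }
        return current;
    }
}
```

A service implementation could catch the ArithmeticException and return -1, which is how the implementations above already signal "can't calculate that".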

Alright. Now we already have three different implementations of the Fibonacci

sequence -- and our Fibonacci interface. These implementations differ in

how they perform the mathematical calculation, which indicates that it might be

useful to register them with the lookup service using entries describing

their performance (fast/slow) and accuracy (accurate/approximate) so that the

client can pick the one implementation that best suits her needs.

However, these implementations will all be executing solely within the

client's JVM as none of them connects back to the service provider for

performing the calculation. Maybe we should provide yet another implementation

of the Fibonacci interface which acts as a proxy for a remote service

doing the actual calculation? That way we would get one more differentiator into

the mix and see how a thin Jini proxy can be implemented. I'll use RMI since

it's the easiest way to go about it. Using raw socket communication, web

services or some other method of communication is a perfectly valid choice as

well, if that suits better in your environment (due to firewalls, existing APIs,

etc.) -- you just need to prime your service proxy with the necessary

information to open a connection back to the actual service implementation.

First of all, here's the actual service implementation implemented as a

remote RMI object and the remote interface used to access the service.

FibonacciRemote.java:

    package com.javaranch.jiniarticle.service.impl;

    import com.javaranch.jiniarticle.service.api.Fibonacci;
    import java.rmi.Remote;

    public interface FibonacciRemote extends Fibonacci, Remote {
    }

As you can see, the remote interface extends the Fibonacci interface

instead of providing a different signature for the "real" service invocation.

FibonacciRemoteImpl.java:

    package com.javaranch.jiniarticle.service.impl;

    import java.io.Serializable;
    import java.rmi.RemoteException;
    import java.rmi.server.UnicastRemoteObject;
    import java.util.HashMap;
    import java.util.Map;

    public class FibonacciRemoteImpl extends UnicastRemoteObject implements FibonacciRemote, Serializable {

        // results calculated so far, keyed by n
        private static final Map preCalculated = new HashMap();

        static {
            preCalculated.put(new Integer(1), new Long(1));
            preCalculated.put(new Integer(2), new Long(1));
        }

        public FibonacciRemoteImpl() throws RemoteException {
        }

        public long calculate(int n) throws RemoteException {
            System.out.println(getClass().getName() + " calculating f(" + n + ")...");
            return fibonacci(n);
        }

        public long fibonacci(int n) throws RemoteException {
            if (n < 1) {
                // without this guard the recursion below would never terminate
                return -1;
            }
            // remote calls may arrive concurrently, so guard the shared cache
            synchronized (preCalculated) {
                Long value = (Long) preCalculated.get(new Integer(n));
                if (value != null) {
                    return value.longValue();
                } else {
                    long v = fibonacci(n - 1) + fibonacci(n - 2);
                    preCalculated.put(new Integer(n), new Long(v));
                    return v;
                }
            }
        }
    }

Great. Now that we have the backend service implementation all set, the only

thing left is to let the client use our service somehow. This can be

accomplished by registering a thin service proxy with the lookup service.

FibonacciRemoteProxy.java:

    package com.javaranch.jiniarticle.service.impl;

    import com.javaranch.jiniarticle.service.api.Fibonacci;
    import java.io.Serializable;
    import java.rmi.RemoteException;

    public class FibonacciRemoteProxy implements Fibonacci, Serializable {

        private FibonacciRemote backend;

        public FibonacciRemoteProxy() {
            // create a default implementation of the backend
            // (note: this anonymous fallback is not Serializable, so a proxy
            // created this way can't be registered with a lookup service)
            backend = new FibonacciRemote() {
                public long calculate(int n) {
                    return -1;
                }
            };
        }

        public FibonacciRemoteProxy(FibonacciRemote backend) {
            this.backend = backend;
        }

        public long calculate(int n) throws RemoteException {
            System.out.println("FibonacciRemoteProxy proxying f(" + n + ") to the backend...");
            return backend.calculate(n);
        }
    }

In practice, the client downloads this thin proxy from the lookup service and

uses it like any other implementation's service item. This one just happens to

make remote method invocations to the real service implementation. I put a

couple of System.out.println's in there so that we can actually see with our own

eyes where each piece of code gets executed.

If we had chosen raw sockets instead of RMI for our remote service
implementation, the proxy class would be primed with a hostname and port number
pair instead of being handed an RMI stub for the real service implementation.

As you may have noticed, all of these implementations have two things in

common: 1) the service item visible to the client implements the

Fibonacci interface, and 2) they implement java.io.Serializable

for enabling the client to download them over the network in the first place

(actually, not implementing Serializable would've caused trouble already

when trying to register the item with a lookup service...).

Right. Now we have a service interface and a number of different, alternative

implementations for that service. However, we haven't yet seen how to get these

implementations registered with a lookup service. That's our next topic.

The service provider

In order for anyone to be able to use our services, we need to publish them

into a lookup service accessible by the client. The following class, invoked

from a command prompt, discovers a lookup service and registers one instance of

each of our service implementations with that lookup service. I have omitted

parts of the code for brevity (the full source code is available from the

References section) but I'm sure you'll be able to follow.

Service.java:

    package com.javaranch.jiniarticle.service;

    import com.javaranch.jiniarticle.service.api.Fibonacci;
    import com.javaranch.jiniarticle.service.impl.*;
    import net.jini.core.discovery.LookupLocator;
    import net.jini.core.entry.Entry;
    import net.jini.core.lease.Lease;
    import net.jini.core.lookup.ServiceID;
    import net.jini.core.lookup.ServiceItem;
    import net.jini.core.lookup.ServiceRegistrar;
    import net.jini.core.lookup.ServiceRegistration;
    import net.jini.lookup.entry.Name;

    import java.io.*;
    import java.net.MalformedURLException;
    import java.util.Date;

    public class Service {

        public static void main(String[] args) throws Exception {
            System.setSecurityManager(new java.rmi.RMISecurityManager());

            // lookup a "registrar" for a known lookup service with
            // which to register our service implementations
            ServiceRegistrar registrar = lookupRegistrar();

            register(registrar, new FibonacciBasicImpl());
            register(registrar, new FibonacciFloatImpl());
            register(registrar, new FibonacciMemorizingImpl());

            FibonacciRemote backend = new FibonacciRemoteImpl();
            long lease = register(registrar, new FibonacciRemoteProxy(backend));

            lease = (lease - System.currentTimeMillis()) / 1000;
            log("----- " + lease + " seconds until the last lease expires...");
        }

        private static ServiceRegistrar lookupRegistrar() {
            try {
                // create a "unicast lookup locator" for a known lookup service
                LookupLocator locator = new LookupLocator("jini://localhost:4160");

                // ask for a "registrar" to register services with
                return locator.getRegistrar();
            } catch (ClassNotFoundException e) {
                log(e.getMessage());
            } catch (MalformedURLException e) {
                log(e.getMessage());
            } catch (IOException e) {
                log(e.getMessage());
            }
            return null;
        }

        private static long register(ServiceRegistrar reg, Fibonacci impl) {
            log("----- Registering " + impl.getClass().getName() + " ...");
            long lease = -1;
            try {
                // read the service ID from disk, if this isn't the first
                // time we're registering this particular implementation
                ServiceID serviceID = readServiceId(impl.getClass().getName());

                // Constructing a ServiceItem to register
                Entry[] entries = new Entry[1];
                entries[0] = new Name(impl.getClass().getName());
                ServiceItem item = new ServiceItem(serviceID, impl, entries);

                // register the service implementation with the registrar
                ServiceRegistration registration =
                    reg.register(item, Lease.FOREVER); // maximum lease
                lease = registration.getLease().getExpiration();

                log("Registered service "
                    + registration.getServiceID().toString()
                    + "\n Lookup service: "
                    + reg.getLocator().getHost()
                    + ":"
                    + reg.getLocator().getPort()
                    + "\n Lease: " + new Date(lease));

                // write the service ID (back) to disk for later reference
                // so that we won't be re-registering the same implementation
                // to the same lookup service with different service IDs
                persistServiceId(impl.getClass().getName(),
                    registration.getServiceID().toString());
            } catch (Exception e) {
                log("ERROR: " + e.getMessage());
            }
            return lease;
        }

        // log(), readServiceId() and persistServiceId() omitted for brevity
    }

The Service class reuses existing service IDs by writing newly

assigned service IDs into a "ServiceItemClass.sid" file based on the service

item class name and tries to read the previously assigned and persisted service

ID upon subsequent executions of the program.
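Since the helper methods for reading and writing the service ID were left out of the listing, here is a rough idea of what such persistence could look like. This is a sketch under my own assumptions and not the code from the downloadable source: ServiceIdStore is a hypothetical class, it uses the newer java.nio.file API, and it deals with the ID purely as text, leaving out the conversion back into a Jini ServiceID object.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/** Stores one service ID per service item class in "<ClassName>.sid"; hypothetical helper. */
class ServiceIdStore {
    private final Path directory;

    ServiceIdStore(Path directory) {
        this.directory = directory;
    }

    private Path fileFor(String className) {
        return directory.resolve(className + ".sid");
    }

    /** Returns the stored ID text, or null if this class has never been registered. */
    String read(String className) throws IOException {
        Path file = fileFor(className);
        if (!Files.exists(file)) {
            return null;
        }
        return new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
    }

    /** Writes (or overwrites) the ID text for the given class. */
    void persist(String className, String serviceId) throws IOException {
        Files.write(fileFor(className), serviceId.getBytes(StandardCharsets.UTF_8));
    }
}
```

Returning null for a first-time registration mirrors the listing above, where a missing service ID simply means the lookup service gets to assign one.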

Notice how in the main() method the RMI-based thin proxy is created by

priming it with the backend implementation's RMI stub while the standalone

service items are registered as-is.

The client

Brilliant! The last thing on our to-do list is the client, the application

that needs someone to calculate a Fibonacci sequence for him.

Since this is an example, which gives us a little artistic freedom, we'll

make our client use every single one of our implementations in turn instead of

just picking one and using that.

Client.java:

    package com.javaranch.jiniarticle.client;

    import java.io.IOException;
    import java.net.MalformedURLException;
    import java.rmi.RemoteException;

    import net.jini.core.discovery.LookupLocator;
    import net.jini.core.lookup.ServiceItem;
    import net.jini.core.lookup.ServiceMatches;
    import net.jini.core.lookup.ServiceRegistrar;
    import net.jini.core.lookup.ServiceTemplate;

    import com.javaranch.jiniarticle.service.api.Fibonacci;

    public class Client {

        public static void main(String[] args) {
            // we need to download code so we need a security manager
            System.setSecurityManager(new java.rmi.RMISecurityManager());

            // locate service implementations from the Jini network
            Fibonacci[] services = locateFibonacciServices();
            if (services.length == 0) {
                System.err.println("Couldn't locate a Fibonacci service!");
                System.exit(1);
            }

            // use the service implementations and print out their results and performances
            for (int j = 0; j < services.length; j++) {
                System.out.println("-----\n " + services[j].getClass().getName());
                for (int i = 0; i < args.length; i++) {
                    int n = Integer.parseInt(args[i]);
                    Result result = calculate(n, services[j]);
                    System.out.println(" f(" + n + ") = " + result.result + "\t(" + result.duration + "ms)");
                }
            }
        }

        // a simple data structure for passing around a pair of calculation result and duration
        static class Result {
            long result;
            long duration;
        }

        private static Result calculate(int n, Fibonacci implementation) {
            Result result = new Result();
            result.duration = System.currentTimeMillis();
            try {
                result.result = implementation.calculate(n);
            } catch (RemoteException e) {
                System.err.println(e.getMessage());
                result.result = -1;
            }
            result.duration = System.currentTimeMillis() - result.duration;
            return result;
        }

        private static Fibonacci[] locateFibonacciServices() {
            try {
                String host = "jini://localhost:4160";
                System.out.println("Creating a LookupLocator for " + host);
                LookupLocator locator = new LookupLocator(host);

                System.out.println("Obtaining a ServiceRegistrar from the LookupLocator");
                ServiceRegistrar registrar = locator.getRegistrar();

                // create a "service template" describing the service for
                // which we're looking for available implementations
                ServiceTemplate tmpl =
                    new ServiceTemplate(
                        null,
                        new Class[] { Fibonacci.class },
                        null);

                // perform a lookup based on the service template
                ServiceMatches matches = registrar.lookup(tmpl, 50);
                System.out.println("Found " + matches.items.length + " matching services");

                Fibonacci[] services = new Fibonacci[matches.items.length];
                for (int i = 0; i < matches.items.length; i++) {
                    ServiceItem item = matches.items[i];
                    services[i] = (Fibonacci) item.service;
                    System.out.println(" [" + i + "] " + services[i].getClass().getName());
                }
                return services;
            } catch (MalformedURLException e) {
                System.err.println("[ERROR] " + e.getMessage());
            } catch (IOException e) {
                System.err.println("[ERROR] " + e.getMessage());
            } catch (ClassNotFoundException e) {
                System.err.println("[ERROR] " + e.getMessage());
            }
            return new Fibonacci[0];
        }
    }

The client application you just saw asks a known lookup service for every

registered service that implements the Fibonacci interface, invokes each of

them for each n given as a command-line argument, and prints out the

results and durations of the calculations (just for fun :).
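To make the matching step more concrete, here is a minimal, self-contained sketch of type-based lookup. It is not Jini code and says nothing about how a real lookup service is implemented: TypeRegistry, register, lookup and the Fib interface are hypothetical names, and the "registry" is just an in-memory list. It only illustrates the idea behind passing new Class[] { Fibonacci.class } in the service template: every registered object that is an instance of all the requested types matches, up to a maximum number of results.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the article's Fibonacci interface (no RemoteException here).
interface Fib {
    long calculate(int n);
}

// Hypothetical in-memory registry; a real Jini lookup service is a remote service.
final class TypeRegistry {

    private final List<Object> services = new ArrayList<>();

    void register(Object service) {
        services.add(service);
    }

    // Returns at most maxMatches registered objects that implement every given type.
    List<Object> lookup(Class<?>[] types, int maxMatches) {
        List<Object> matches = new ArrayList<>();
        for (Object candidate : services) {
            if (matches.size() >= maxMatches) {
                break;
            }
            boolean implementsAll = true;
            for (Class<?> type : types) {
                if (!type.isInstance(candidate)) {
                    implementsAll = false;
                    break;
                }
            }
            if (implementsAll) {
                matches.add(candidate);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        TypeRegistry registry = new TypeRegistry();
        registry.register((Fib) n -> n);                 // matches the template
        registry.register("not a Fibonacci service");    // does not match
        List<Object> found = registry.lookup(new Class<?>[] { Fib.class }, 50);
        System.out.println("Found " + found.size() + " matching services");
    }
}
```

As in the client above, the caller still has to cast each match to the interface it asked for before invoking it.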

Running the example

In order to run our example code, we need to have a lookup service running at

localhost:4160, an RMI server running at localhost:1098, and an

HTTP server running somewhere for the client to download the service

implementations (or their proxies) as well as the lookup service's client stubs.

This setup can be accomplished by running the batch scripts presented earlier

in this article in the following order (remember that you only need to start

each of these once -- if you started these services back in "Installing the

Jini Starter Kit", you don't need to do this again):

- startRmiServer.bat

- startHttpServer.bat

- startLookupService.bat

Now that we have the infrastructure in place, it's time to build and deploy

our example code. The easiest way to do this is to run the default target of the

Ant build script provided as part of the

article's resources archive:

C:\JiniArticle> ant

The default target basically compiles all the source code, packages it into a

couple of JAR files in the "build" directory, and copies the necessary files

into the "webroot" directory from where the HTTP server will serve them to

whoever needs to download them.

Next, we need to run the Service class in order to register our

service implementations with the newly started lookup service. You could do this

via the command-prompt or rely on the "run.service" target in the build script.

Here's a batch script for registering the service implementations from the

command prompt, just to show what the classpath should include and what JVM

arguments are required:

startServices.bat:

@echo off

@rem -- Running the service registration program requires the

@rem -- com.javaranch.jiniarticle.service.* classes (service.jar)

@rem -- in its classpath as well as the Jini libraries

set CLASSPATH=-classpath build/service.jar

set CLASSPATH=%CLASSPATH%;lib/jini-core.jar

set CLASSPATH=%CLASSPATH%;lib/jini-ext.jar

set POLICY=-Djava.security.policy=policy.all

set CODEBASE=-Djava.rmi.server.codebase=http://localhost:8080/

java %CLASSPATH% %POLICY% %CODEBASE% com.javaranch.jiniarticle.service.Service

As you can see, the service registration program needs to be given a

codebase. Why is that? The codebase is needed because any code we're about to

"upload" to the lookup service has to carry information about where to download

the rest of the code. If we had packed up our code into a JAR file, the

codebase property used here would have to be a direct URL to that particular JAR

file. However, since we're serving individual classes from the web server's root

directory, the codebase property points to that context. (Note: the

codebase must end with a slash when using this approach.)
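The trailing-slash note can be illustrated with plain URL resolution. The sketch below is not part of the article's code: CodebaseDemo and classUrl are hypothetical names, the "/classes" path is made up for the demonstration, and java.net.URI.resolve is used only to mimic how a class name turns into a download location relative to a directory-style codebase. The underlying rule comes from Java's URLClassLoader, which treats a URL ending in "/" as a directory and anything else as a JAR file.

```java
import java.net.URI;

final class CodebaseDemo {

    // Maps a fully qualified class name to the URL it would be fetched from,
    // relative to the given codebase (standard relative URL resolution).
    static String classUrl(String codebase, String className) {
        String resource = className.replace('.', '/') + ".class";
        return URI.create(codebase).resolve(resource).toString();
    }

    public static void main(String[] args) {
        String name = "com.javaranch.jiniarticle.service.api.Fibonacci";
        // With the trailing slash, the class path is appended to the directory.
        System.out.println(classUrl("http://localhost:8080/classes/", name));
        // Without it, the last path segment ("classes") is replaced instead.
        System.out.println(classUrl("http://localhost:8080/classes", name));
    }
}
```

The second call loses the "classes" segment, which is one way to see why a directory codebase without the final slash ends up pointing at the wrong place.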

Right. Let's register some Jini services!

C:\JiniArticle> startServices

If all goes well, you should be looking at a couple of debugging messages

telling us that our service implementations were successfully registered with the

lookup service:

C:\JiniArticleProject>startServices.bat

19:31:21 ----- Registering com.javaranch.jiniarticle.service.impl.FibonacciBasicImpl ...

19:31:21 Registered service 858ad2ab-2594-49f1-8a49-a2a4087ff568

Lookup service: ACN931JT0J:4160

Lease expires: Sun Mar 28 19:36:21 EEST 2004

19:31:21 ----- Registering com.javaranch.jiniarticle.service.impl.FibonacciFloatImpl ...

19:31:22 Registered service 2f96135e-1230-4cb6-871d-946a002eb1ae

Lookup service: ACN931JT0J:4160

Lease expires: Sun Mar 28 19:36:21 EEST 2004

19:31:22 ----- Registering com.javaranch.jiniarticle.service.impl.FibonacciMemorizingImpl ...

19:31:22 Registered service 507c05ac-52e0-4d4b-b87e-f4e7f06bdad4

Lookup service: ACN931JT0J:4160

Lease expires: Sun Mar 28 19:36:22 EEST 2004

19:31:22 ----- Registering com.javaranch.jiniarticle.service.impl.FibonacciRemoteProxy ...

19:31:22 Registered service 4902a68e-c56a-4863-9e62-ed18e1f22729

Lookup service: ACN931JT0J:4160

Lease expires: Sun Mar 28 19:36:22 EEST 2004

19:31:22 ----- 299 seconds until the last lease expires...
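The "seconds until the last lease expires" figure in the final line is simple arithmetic on an absolute expiration time. Here is a minimal sketch, assuming the expiration is available as milliseconds since the epoch (which is how Jini's Lease.getExpiration() reports it). LeaseMath and its methods are hypothetical helpers and do not come from the article's Service class.

```java
final class LeaseMath {

    // Whole seconds left until the given absolute expiration time; never negative.
    static long secondsUntil(long expirationMillis, long nowMillis) {
        if (expirationMillis <= nowMillis) {
            return 0L;
        }
        return (expirationMillis - nowMillis) / 1000L;
    }

    // Latest expiration among several leases, i.e. the moment the last one runs out.
    static long lastExpiration(long[] expirations) {
        long last = Long.MIN_VALUE;
        for (long expiration : expirations) {
            last = Math.max(last, expiration);
        }
        return last;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        // Pretend three leases of roughly five minutes each were granted moments ago.
        long[] leases = { now + 299_200L, now + 299_600L, now + 299_900L };
        System.out.println(secondsUntil(lastExpiration(leases), now)
                + " seconds until the last lease expires...");
    }
}
```

A registration program that wants its services to stay visible would have to renew the leases before that countdown reaches zero.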

With the service implementations registered, it's time to launch the client

and watch how the magic works! Again, an Ant target named "run.client" is

provided which asks each implementation to calculate a couple of n's.

Here's the corresponding command-prompt version to illustrate what the client

JVM needs to know:

startClient.bat:

@echo off

@rem -- Running the client program requires the service's interface classes

@rem -- (service-api.jar) in its classpath as well as the Jini libraries.

@rem -- Furthermore, the client's JVM needs to be instructed to use a

@rem -- policy file which permits downloading code from the lookup service

@rem -- and connecting to the service provider (FibonacciRemoteImpl).

set CLASSPATH=-classpath build/client.jar

set CLASSPATH=%CLASSPATH%;build/service-api.jar

set CLASSPATH=%CLASSPATH%;lib/jini-core.jar

set CLASSPATH=%CLASSPATH%;lib/jini-ext.jar

set POLICY=-Djava.security.policy=policy.all

java %CLASSPATH% %POLICY% com.javaranch.jiniarticle.client.Client %1 %2 %3 %4 %5

Running this script with

C:\JiniArticle> startClient 1 20 35

should result in each service implementation calculating the results for

f(1), f(20) and f(35), respectively. Note that it takes some time for the

FibonacciBasicImpl to calculate n's beyond 30...

Here's the output I got running the above script:

C:\JiniArticleProject> startClient 1 20 35

Creating a LookupLocator for jini://localhost:4160

Obtaining a ServiceRegistrar from the LookupLocator

Found 4 matching services

[0] com.javaranch.jiniarticle.service.impl.FibonacciRemoteProxy

[1] com.javaranch.jiniarticle.service.impl.FibonacciMemorizingImpl

[2] com.javaranch.jiniarticle.service.impl.FibonacciFloatImpl

[3] com.javaranch.jiniarticle.service.impl.FibonacciBasicImpl

-----

com.javaranch.jiniarticle.service.impl.FibonacciRemoteProxy

FibonacciRemoteProxy proxying f(1) to the backend...

f(1) = 1 (10ms)

FibonacciRemoteProxy proxying f(20) to the backend...

f(20) = 6765 (10ms)

FibonacciRemoteProxy proxying f(35) to the backend...

f(35) = 9227465 (0ms)

-----

com.javaranch.jiniarticle.service.impl.FibonacciMemorizingImpl

f(1) = 1 (0ms)

f(20) = 6765 (0ms)

f(35) = 9227465 (0ms)

-----

com.javaranch.jiniarticle.service.impl.FibonacciFloatImpl

f(1) = 1 (0ms)

f(20) = 6765 (0ms)

f(35) = 9227465 (0ms)

-----

com.javaranch.jiniarticle.service.impl.FibonacciBasicImpl

f(1) = 1 (0ms)

f(20) = 6765 (0ms)

f(35) = 9227465 (421ms)

C:\JiniArticleProject>
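The gap between 421ms and 0ms for f(35) is what one would expect from a naive doubly recursive calculation compared with one that remembers earlier results. The sketch below illustrates that general technique only. It does not reproduce the article's FibonacciBasicImpl or FibonacciMemorizingImpl, and FibSketch, naive and memoized are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

final class FibSketch {

    // Naive recursion: recomputes the same subproblems over and over,
    // so the amount of work grows exponentially with n.
    static long naive(int n) {
        return n < 2 ? n : naive(n - 1) + naive(n - 2);
    }

    private static final Map<Integer, Long> CACHE = new HashMap<>();

    // Memoized recursion: each f(k) is computed once and then served from the cache.
    static long memoized(int n) {
        if (n < 2) {
            return n;
        }
        Long cached = CACHE.get(n);
        if (cached != null) {
            return cached;
        }
        long value = memoized(n - 1) + memoized(n - 2);
        CACHE.put(n, value);
        return value;
    }

    public static void main(String[] args) {
        for (int n : new int[] { 1, 20, 35 }) {
            long start = System.currentTimeMillis();
            long slow = naive(n);
            long naiveMs = System.currentTimeMillis() - start;

            start = System.currentTimeMillis();
            long fast = memoized(n);
            long memoMs = System.currentTimeMillis() - start;

            System.out.println("f(" + n + ") = " + slow + " (naive, " + naiveMs + "ms), "
                    + fast + " (memoized, " + memoMs + "ms)");
        }
    }
}
```

Exact timings depend on the machine and JVM, so the numbers printed here will not match the listing above.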

Summary

We've just gone through the main concepts and architecture of the Jini

network technology and seen a working example of its usage. However, there's a

lot more to Jini than what you've seen in this article! We only mentioned in

passing some major features such as distributed events, the transaction service,

and, perhaps most importantly, JavaSpaces. Maybe we'll go into those in a follow-up

article or two ;)

Jini success stories

So, we have a technology named Jini which can do all sorts of cool things, but

is it being used in the Real World? As you've probably guessed by now, the

answer is yes.

A good example of a real-world application of Jini is the cluster

implementation of Macromedia's J2EE application server, JRun 4, where the nodes

in a cluster are discovered automatically using the Jini discovery service

and communicate with each other through Jini proxies as described in the

previous section.

There are similar peer-to-peer communication protocols that could've done the

job equally well, such as JGroups or even JXTA, but the fact that Jini is a mature

standard differentiates it from the competition. Furthermore, Jini brings a lot

of advanced features such as distributed transactions and events.

Take a look at the links provided in References and Sun Microsystems'

list of Jini success stories for more about the application of Jini technologies.

Would you be surprised to learn that the U.S. military has used Jini heavily in

their training and simulation systems?

Resources and references
